Twitter is a huge source of raw data that you can explore to find interesting insights. Millions of companies, politicians and journalists use this social media tool to interact with their audience, and they mine its data to learn more about trends and users.

This post is the first in a series dedicated to mining Twitter data using Python. In this first part, I focus on collecting data from Twitter through the API to build our dataset; in the following parts, I'll show how to process and analyze the data.

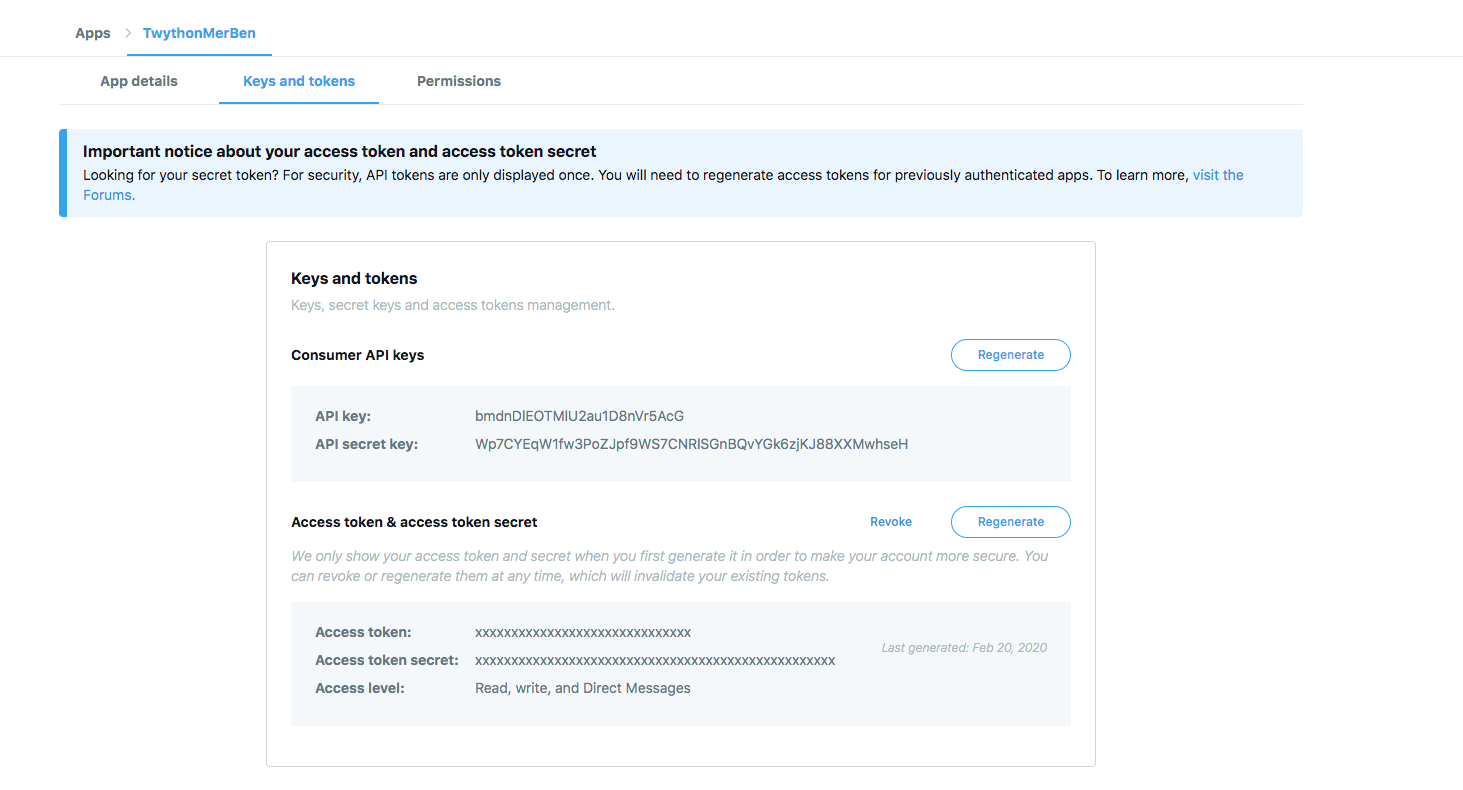

1 - Register the App

To access Twitter data programmatically, we first need to create an app that interacts with the Twitter API.

To do so, follow this link https://developer.twitter.com/en/apps/ and create an app as shown in the screenshot below (you need to be logged in to your Twitter account).

Twitter provides two kinds of APIs: the REST Search API and the Streaming API. The first lets you search historical data from Twitter's search index (up to 7 days back for standard free accounts), while the second is a real-time streaming API that delivers data from the moment the query starts. Depending on your needs, you choose one of these APIs for your application.

In this article, I'll cover the REST Search API. It has a few limitations that you need to be aware of before using it, and I'll show you some techniques to make the most of the resources the API gives you.

The official documentation points out the following limitations:

"Before getting involved, it’s important to know that the Search API is focused on relevance and not completeness. This means that some Tweets and users may be missing from search results. If you want to match for completeness, you should consider using a Streaming API instead."

Source: Twitter Search API documentation

"Please note that Twitter’s search service and, by extension, the Search API is not meant to be an exhaustive source of tweets. Not all tweets will be indexed or made available via the search interface."

Source: Twitter Search API documentation

Twitter also enforces usage limits on its APIs, detailed on the API Rate Limit page. There we can see that the Search API is limited to 180 requests per 15-minute window with user authentication.

So, doing the math: since each request to the standard Search API returns at most 100 tweets, user authentication gives at most about 18,000 tweets per 15-minute window (180 requests × 100 tweets).

The Search API also supports an authentication method dedicated to applications (app authentication). It has higher usage limits: up to 450 requests per 15-minute window.
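The arithmetic is easy to check in a few lines of Python. This is a best-case upper bound that assumes every request returns a full page of 100 tweets; the helper names are my own, not part of any Twitter library.

```python
import math

TWEETS_PER_REQUEST = 100  # max `count` per request on the standard Search API
USER_AUTH_LIMIT = 180     # requests per 15-minute window, user authentication
APP_AUTH_LIMIT = 450      # requests per 15-minute window, app authentication


def tweets_per_window(requests_per_window: int,
                      tweets_per_request: int = TWEETS_PER_REQUEST) -> int:
    """Best-case number of tweets fetched in one 15-minute window."""
    return requests_per_window * tweets_per_request


def windows_needed(total_tweets: int, requests_per_window: int) -> int:
    """Best-case number of 15-minute windows needed to fetch total_tweets."""
    return math.ceil(total_tweets / tweets_per_window(requests_per_window))


print(tweets_per_window(USER_AUTH_LIMIT))        # 18000
print(tweets_per_window(APP_AUTH_LIMIT))         # 45000
print(windows_needed(100_000, APP_AUTH_LIMIT))   # 3
```

In practice pages can come back with fewer than 100 tweets, so real throughput is usually lower.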

If you use the Tweepy library, this method is available through its AppAuthHandler class. Passing wait_on_rate_limit=True (and, in Tweepy 3.x, wait_on_rate_limit_notify=True) to tweepy.API makes the calls wait automatically when the limit is reached and resume once the window expires, which keeps the code simple and clean.
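Twython, which the code in this post uses, does not appear to offer an equivalent automatic-wait flag, so a long-running loop has to wait on its own. Below is a minimal, library-agnostic sketch of that idea. RateLimitError is a hypothetical stand-in exception carrying the epoch time at which the window resets. In Twython, the closest match seems to be TwythonRateLimitError, whose retry_after attribute, as far as I can tell, holds the X-Rate-Limit-Reset header value; treat that mapping as an assumption and check it against your Twython version.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimitError(Exception):
    """Hypothetical stand-in for a client library's rate-limit exception."""

    def __init__(self, reset_at: float):
        super().__init__(f"rate limited until {reset_at}")
        self.reset_at = reset_at  # epoch seconds when the window resets


def call_waiting_on_rate_limit(
    fn: Callable[[], T],
    max_retries: int = 3,
    sleep: Callable[[float], None] = time.sleep,
    now: Callable[[], float] = time.time,
) -> T:
    """Call fn(); on RateLimitError, sleep until the window resets, then retry."""
    for attempt in range(max_retries + 1):
        try:
            return fn()
        except RateLimitError as err:
            if attempt == max_retries:
                raise  # give up after max_retries waits
            # Wait until the reset time, plus a one-second safety margin.
            sleep(max(0.0, err.reset_at - now()) + 1.0)
    raise AssertionError("max_retries must be >= 0")
```

The sleep and now parameters are injectable so the waiting logic can be tested without actually pausing.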

2 - Let's start coding

The code sample below shows how to use app authentication with the Twython library. The Twitter APIs also support client libraries written in other languages; you can find more details in the official documentation.

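```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-

import configparser
import json
import os
import sys

from twython import Twython, TwythonError

config = configparser.ConfigParser()

# Read APP_KEY and APP_SECRET from config.ini in the parent directory.
# Update it with your credentials before running the app.
config_path = os.path.join(os.path.dirname(os.path.abspath(__file__)), '..', 'config.ini')
config.read(config_path)

APP_KEY = config['twitter_connections_details']['APP_KEY']
APP_SECRET = config['twitter_connections_details']['APP_SECRET']

try:
    # App (OAuth 2) authentication: exchange the key/secret for a bearer
    # token, then create the client that uses that token.
    twitter = Twython(APP_KEY, APP_SECRET, oauth_version=2)
    ACCESS_TOKEN = twitter.obtain_access_token()
    api = Twython(APP_KEY, access_token=ACCESS_TOKEN)
except TwythonError as e:
    print('Authentication failed!', e)
    sys.exit(-1)
```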
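The script expects a config.ini file with a twitter_connections_details section holding APP_KEY and APP_SECRET. A quick way to check that layout is to parse a sample string with configparser.read_string. The values below are placeholders, and the file in my repository may contain other entries.

```python
import configparser

# Hypothetical config.ini contents: section and key names match what the
# script reads; the values are placeholders for your own credentials.
SAMPLE_CONFIG = """
[twitter_connections_details]
APP_KEY = your-consumer-key
APP_SECRET = your-consumer-secret
"""

config = configparser.ConfigParser()
config.read_string(SAMPLE_CONFIG)

section = config['twitter_connections_details']
print(section['APP_KEY'])     # your-consumer-key
print(section['APP_SECRET'])  # your-consumer-secret
```

Option names are case-insensitive in configparser, so APP_KEY can be looked up exactly as the script does.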

The api variable is now the object we are going to use for the search operations in Twitter.

As an example, I search for tweets from the city where I live by providing the coordinates (latitude, longitude) of a central point and a radius of 12 miles.

Here is the search query I used (you can find all the Search API parameters in the official documentation):

```python
search_query = "geocode:45.533471,-73.552805,12mi"
```
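To try other areas, a small helper can build the same operator string from a center point and a radius. The function below is my own, hypothetical helper; it only formats and sanity-checks the values, using the "latitude,longitude,radius" layout and the mi/km units that the Search API documentation describes for geocode.

```python
def geocode_query(lat: float, lon: float, radius: float, unit: str = "mi") -> str:
    """Build a `geocode:lat,long,radius` search operator string."""
    if not -90 <= lat <= 90:
        raise ValueError("latitude must be between -90 and 90")
    if not -180 <= lon <= 180:
        raise ValueError("longitude must be between -180 and 180")
    if unit not in ("mi", "km"):
        raise ValueError("unit must be 'mi' or 'km'")
    # :g drops a trailing .0, so a radius of 12 becomes "12", not "12.0".
    return f"geocode:{lat},{lon},{radius:g}{unit}"


print(geocode_query(45.533471, -73.552805, 12))  # geocode:45.533471,-73.552805,12mi
```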

Unlike the Streaming API, which gives you data as soon as it is available and matches your search criteria, the Search API is stateless: it doesn't carry the search context over repeated calls.

So we have to tell the server which batch of results we received last, so that it can send the next batch (this is the concept of pagination).

The Search API accepts two parameters, max_id and since_id, which act as upper and lower bounds on tweet IDs: max_id returns tweets with an ID less than or equal to it, and since_id returns tweets with an ID greater than it. To page backwards through the results, set max_id to one less than the lowest ID received so far.

Below is the code I used:
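Here is a self-contained simulation of paging backwards with max_id, where each new request uses the oldest ID seen minus one. fake_search is a hypothetical stand-in that mimics the documented semantics (newest first, max_id inclusive, since_id exclusive) on a plain list of integer IDs; it makes no network calls.

```python
from typing import List, Optional


def fake_search(all_ids: List[int], count: int,
                max_id: Optional[int] = None,
                since_id: Optional[int] = None) -> List[int]:
    """Stand-in for the Search API: newest-first IDs within the bounds."""
    matching = [i for i in sorted(all_ids, reverse=True)
                if (max_id is None or i <= max_id)
                and (since_id is None or i > since_id)]
    return matching[:count]


def fetch_all(all_ids: List[int], count: int,
              since_id: Optional[int] = None) -> List[int]:
    """Collect every matching ID by walking backwards with max_id."""
    collected: List[int] = []
    max_id: Optional[int] = None
    while True:
        page = fake_search(all_ids, count, max_id=max_id, since_id=since_id)
        if not page:
            break  # no older results left
        collected.extend(page)
        # Next request: only IDs strictly lower than the oldest one seen.
        max_id = min(page) - 1
    return collected


ids = [101, 105, 110, 120, 130, 140, 150]
print(fetch_all(ids, count=3))                # [150, 140, 130, 120, 110, 105, 101]
print(fetch_all(ids, count=3, since_id=110))  # [150, 140, 130, 120]
```

Reusing the highest ID of a page as the next max_id would return the same page again, which is why the subtraction matters.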

```python
tweets = []  # list where the fetched tweets are stored
tweets_per_request = 100
max_tweets_to_be_fetched = 10000  # number of tweets you want to fetch (optional)
search_query = "geocode:45.533471,-73.552805,12mi"
max_id = None    # upper bound; None for the first page
since_id = None  # optional lower bound, e.g. the newest ID from a previous run
count_tweets = 0

# Keep calling the API until we get the desired number of tweets
while count_tweets < max_tweets_to_be_fetched:
    try:
        params = {'q': search_query, 'count': tweets_per_request}
        if max_id is not None:  # following pages: only tweets older than the last batch
            params['max_id'] = max_id
        if since_id is not None:
            params['since_id'] = since_id
        tweets_fetched = api.search(**params)
    except Exception as e:
        # Just exit on any error
        print("some error: " + str(e))
        break

    statuses = tweets_fetched.get('statuses', [])
    if not statuses:
        print("\nNo more tweets found")
        break

    tweets.extend(statuses)
    count_tweets += len(statuses)
    sys.stdout.write('\r Number of downloaded Tweets: {0} '.format(count_tweets))
    sys.stdout.flush()

    # Next page: tweets strictly older than the oldest one received so far
    max_id = min(tweet['id'] for tweet in statuses) - 1

# Write the tweets to a JSON file
with open('twitter_data_set.json', 'w') as file:
    json.dump(tweets, file)
```

The code above writes the data to the JSON file twitter_data_set.json. Because it uses app authentication, it can fetch up to 45,000 tweets per 15-minute window (450 requests × 100 tweets).

As you can see, I use max_id to fetch the next page of the result set. The loop keeps running until it has fetched the number of tweets we asked for, runs out of results, or hits an error.
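Before moving on to processing, it can be worth reloading the file and checking for repeated tweets: if pagination goes wrong (for example, the same max_id is reused), the dataset fills up with duplicates. The sketch below is my own helper; it assumes each saved tweet has the standard id_str field and demonstrates itself on a tiny hypothetical file.

```python
import json
import os
import tempfile
from typing import Any, Dict, List


def summarize_dataset(path: str) -> Dict[str, int]:
    """Load a saved list of tweets and count total vs. unique tweet IDs."""
    with open(path, encoding='utf-8') as f:
        tweets: List[Dict[str, Any]] = json.load(f)
    unique_ids = {tweet['id_str'] for tweet in tweets}
    return {'total': len(tweets), 'unique': len(unique_ids)}


# Demo on a tiny hypothetical file; for real data, call
# summarize_dataset('twitter_data_set.json') instead.
with tempfile.TemporaryDirectory() as tmp:
    sample_path = os.path.join(tmp, 'sample.json')
    with open(sample_path, 'w', encoding='utf-8') as f:
        json.dump([{'id_str': '3'}, {'id_str': '2'}, {'id_str': '2'}], f)
    print(summarize_dataset(sample_path))  # {'total': 3, 'unique': 2}
```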

You can find the full code in my Git repository. You need to put your own consumer key and consumer secret (APP_KEY and APP_SECRET) in the config.ini file.

Summary

In this blog post, I’ve explained how to interact with the Twitter REST Search API using Twython. I've also highlighted some of its limitations and shown how to maximize your extraction rate by using app authentication. We have now extracted our dataset; in the next blog posts, I’ll cover the data processing step.